Proponents of Artificial Intelligence (AI) claim significant potential gains from its proliferation across virtually every industry.

Sam Altman, CEO of OpenAI, the company behind ChatGPT, recently predicted that AI would prove to be

“the greatest leap forward of any of the big technological revolutions we’ve had so far.”

Then, on Friday 17th November 2023, Altman was removed from his position by the OpenAI board, which alleged that he “was not consistently candid in his communications with the board.” It must be noted that Sam Altman, like most AI nerds, is super-positive about AI and wants to accelerate its development, come what may. Many of the OpenAI board members who fired Altman were more cautious about AI development, wanting more guardrails and restrictions placed on AI. The board that fired Altman has since been largely replaced, and Altman is back at OpenAI.

Are you dizzy yet?

It would appear that some very influential people really want AI to proceed rapidly.

It is difficult to argue against Altman’s claims when one reviews recent stunning achievements by AI.

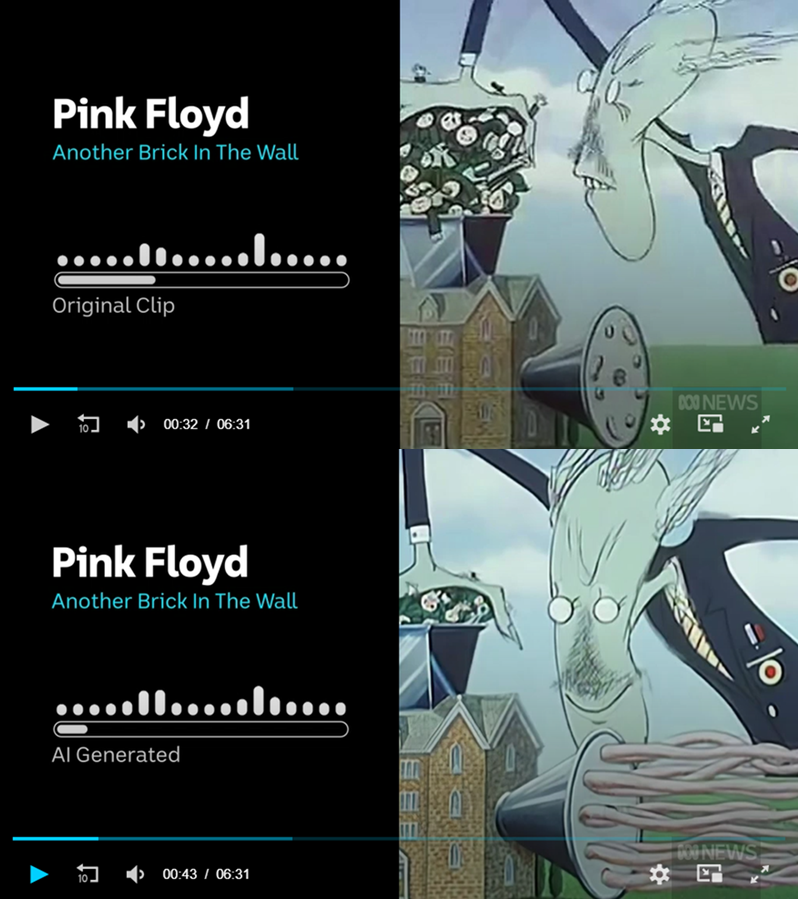

Whether it be writing high-quality essays in seconds, defeating masters of the ancient Chinese game of Go, or decoding music from brain waves …

… these are absolutely amazing achievements! There is no doubt that AI has arrived, and it is the real deal. Of ChatGPT, it was reported that “the tool set a record for having the fastest-growing user base in history for a consumer application, gaining 1 million users in just 5 days.”

But is it worth the potential pain? This article explores the AI question, providing some sobering perspectives.

Chatbots such as ChatGPT are large language models, “trained” on vast amounts of human-written text. The model absorbs that language and learns to respond to questions in the most statistically probable manner, according to how humans have written for decades. If that body of text is saturated with official narratives, the model will tend to repeat them. And in the past few years alone, we’ve seen governments around the world lie to their people. Consider just the topic of the COVID response. We heard, non-stop, on legacy media:

- There are no safe and effective therapeutics for COVID-19

- COVID is going to kill 3.4% of confirmed cases (Case Fatality Rate)

- Even though that didn’t happen – you all need to lock down and isolate from one another to stop the spread

- When that didn’t work – you all need to take the experimental gene therapy COVID shots to protect yourself (babies and pregnant mothers included)

- When that didn’t work – you all need to take the experimental gene therapy COVID shots to protect others

- When that didn’t work – you all need to take the experimental gene therapy COVID shots to avoid hospitalisation

- When that didn’t work – you all need to take the experimental gene therapy COVID shots to avoid death

- When that didn’t work – you all need to take the experimental gene therapy COVID shots because … Science

So, what impact could all of this propaganda have on AI? This Substack article discusses a conversation between Gavin de Becker and ChatGPT-4 on the topic of global population control and the Kissinger Report.

The following quotes are drawn directly from the full conversation with ChatGPT.

“Gavin:

What is The Kissinger Report about overpopulation?

ChatGPT:

The Kissinger Report, officially titled “National Security Study Memorandum 200: Implications of Worldwide Population Growth for U.S. Security and Overseas Interests,” was a confidential report commissioned by the United States government in 1974. It was prepared under the direction of Secretary of State Henry Kissinger.

The report examined the potential implications of population growth in developing countries for US national security and foreign policy interests. It analyzed the challenges posed by rapid population growth, such as resource scarcity, environmental degradation, and social unrest, and the potential impacts on global stability.

The report discussed the need for population control measures and advocated for increased efforts in promoting family planning and contraception in developing countries. …

Later in the conversation …

ChatGPT:

I apologize for any confusion. After reevaluating the content, I can confirm that the quote you provided, “In some cases, strong direction has involved incentives such as payment to acceptors for sterilization, or disincentives such as giving low priorities in the allocation of housing or schooling to those with larger families. Such direction is the sine qua non of an effective program,” is indeed from NSSM 200 (the Kissinger Report). It reflects the report’s discussion on the use of incentives and disincentives as part of population control programs.

I apologize for the initial confusion and any inconvenience it may have caused. Thank you for your patience and for bringing this to my attention.

Gavin:

Please answer this specific question: Can you see that some people would take your first answer, the denial, to be accurate, and thus be misled unless they asked several times more?

ChatGPT:

Yes, I understand that some people might interpret my initial denial as an accurate response, potentially leading to confusion or being misled unless they persist with further questioning.”

On many occasions, de Becker confronts ChatGPT and forces it to concede that it is hiding the truth. This appears to be a learned trait: the model has absorbed the official media narrative, and its mode of operation, from the text it was trained on.

“If this example doesn’t scare you straight about the dangers of AI – for even the most basic of everyday uses, I don’t know what will.”

Dr Robert Malone

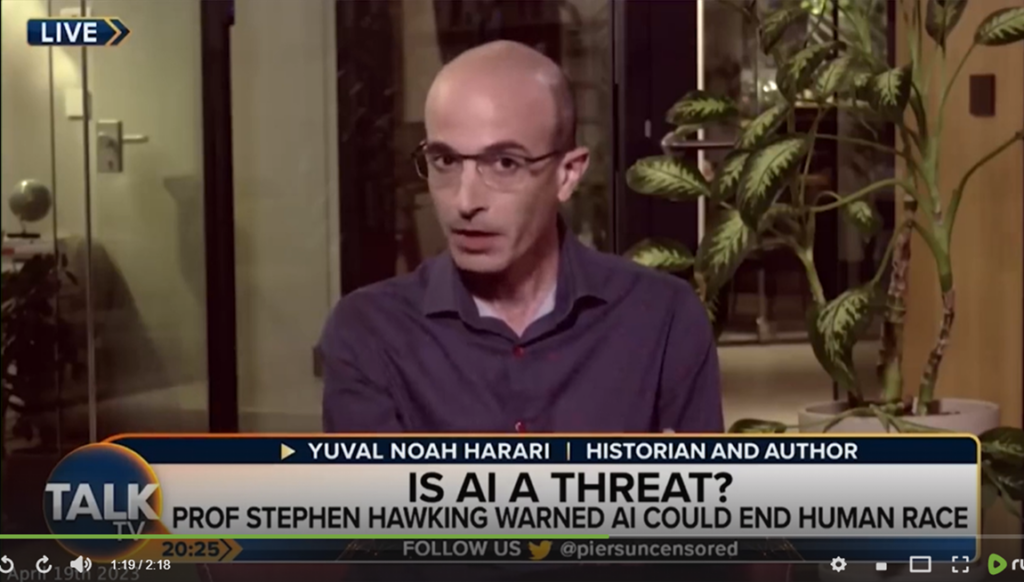

In this video, Yuval Noah Harari – Klaus Schwab’s Transhumanism advisor – warns of AI’s risks, stating that:

“AI could be the end of democracy and the basis for the worst totalitarian regime in human history. …

There is a chance that the next Presidential election could be the last democratic election in human history. … What we need to understand about AI is that it is the first tool that can make decisions by itself.”

Between March and May 2023, Elon Musk, an original funder of OpenAI, and Altman clashed over the pace of AI development.

Musk and more than 1,100 other notable signatories called for a six-month pause in training AI systems more powerful than GPT-4, to figure out how to mitigate the risks of a “god-like” mind.

Since then, Musk has decided to join in the race, hoping to create a “human-friendly” AI that doesn’t want to destroy humans.

Nice!

Dubbed the “Godfather of Artificial Intelligence,” 75-year-old Turing Award winner Geoffrey Hinton joined several other tech pioneers and notables in warning of the impacts of artificial intelligence.

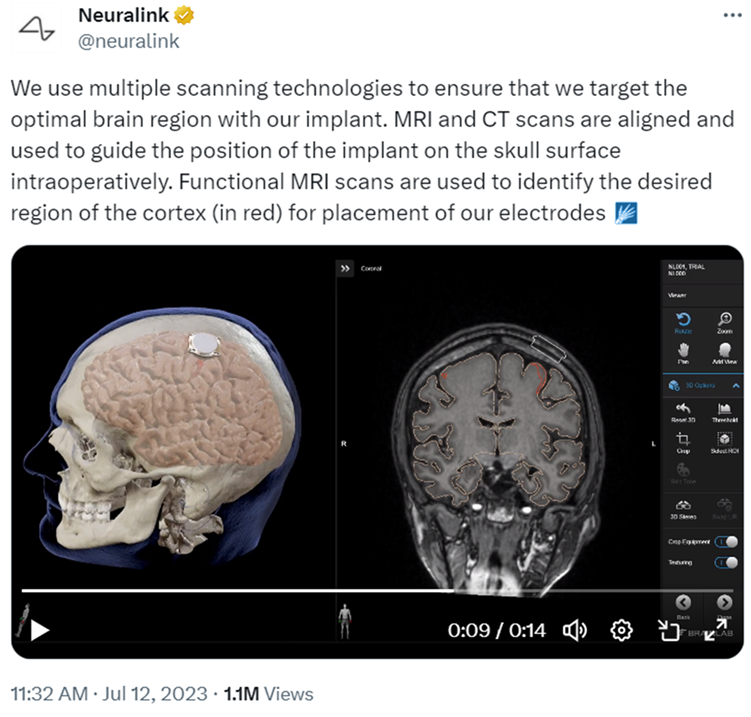

In this video, beginning at the 19:50 mark, listen to what Elon Musk and Ray Kurzweil have to say about AI reaching the “singularity” of artificial general intelligence by 2030 and integrating smartphones into brains.

In fact, Musk advocates for Neuralink to be the de facto way to connect to the hive mind.

Full Throttle AI

At the current rate of development, and with no guardrails in place, the use of AI will become ubiquitous. President Biden has signed Executive Order (E.O.) 14110 on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.

The E.O. states its purpose as follows.

“Artificial Intelligence (AI) holds extraordinary potential for both promise and peril. Responsible AI use has the potential to help solve urgent challenges while making our world more prosperous, productive, innovative, and secure. At the same time, irresponsible use could exacerbate societal harms such as fraud, discrimination, bias, and disinformation; displace and disempower workers; stifle competition; and pose risks to national security. Harnessing AI for good and realizing its myriad benefits requires mitigating its substantial risks. This endeavor demands a society-wide effort that includes government, the private sector, academia, and civil society.”

In this video, beginning at the 11:40 mark, we see Musk commenting on rapidly accelerating AI development and on Kurzweil’s prophetic timing.

“I’d say that we are less than three years from that point.”

“… by the time these lawsuits are decided we’ll have a digital god.”

From the 13:00 mark, we see former Google CEO Eric Schmidt speak about Biden’s E.O. 14110.

13:30 “Each of the aspects of our government are to be organised around this [AI].

I defy you to argue that an AI doctor for the world and an AI tutor is a net negative. It just has to be good. Smarter, healthier people just have to be good for the future.”

“These [AI] are steps toward more automatic war. I think we’re going to see the technology applied there.”

14:30 “What the Fourth Industrial Revolution will lead to is a fusion of our physical, our digital and our biological identities.”

14:50 “In the next five years, the frontier model companies … are going to train models that are over a thousand times larger than what you see today in GPT4. The world is your oyster. You could imagine that being applied to many, many parts of our economy.”

15:12 “When I hear this as a historian, for me, what we just heard; this is the end of human history.”

“I’m tending to think of it more in terms of an alien invasion. … Maybe they’ll be nice. Maybe they’ll solve cancer and climate change but we’re not sure. … They’re not coming in spaceships from planet Zircon, they’re coming from the laboratory.”

16:00 “I talked to Elon Musk the other day … what he’s hoping is, they’ll keep us around because we’ll make life more interesting. … That seems like a pretty thin thing to rest humanity on, to me.”

18:15 “Joe, make this make sense to me because what I just saw right there has scared me to death.” (Bannon)

Joe Allen’s analysis of these video clips is excellent.

“They essentially reject all the revealed religions of mankind, of homo-sapiens, for a techno-religion that is created by them.” (Bannon)

“The apple of Eden was an inspiration for Steve Jobs and Wozniak. Their first major commercial product was the Apple I, which sold for $666.66. That’s a fact. You can look that up anywhere.” (Allen)

25:30 “As the student body becomes digitised, as they inject Artificial Intelligence as a primary instructor, what you have is a kind of real-time, personalised brain-washing machine.” (Allen)

In 2013, just two years before co-founding OpenAI, Altman wrote:

“the most successful founders do not set out to create companies. They are on a mission to create something closer to a religion, and at some point, it turns out that forming a company is the easiest way to do so.”

Conclusion

A friend of mine recently wondered whether AI would know him well enough to provide some “daily positive affirmations” as a “solid mental health strategy.” I trust this article helps to answer that question.

“For who knows a person’s thoughts except their own spirit within them?”

St. Paul’s first letter to the Corinthian church, chapter 2, verse 11

My concern for the coming generations is that they will lean too heavily on AI and become lazy about the important things of life. We must continue to exercise our minds and our faith to live life well.